KT and Scholarship (click here for slides)

On September 30th 2014 I was invited to address a distinguished group of senior leaders in academia, health, and philanthropy at an evening reception for the Institute of Advanced Studies at the University of Western Australia. This blog post is derived from my remarks that evening.

In 2003, The Commonwealth Fund Task Force on Academic Health Centers in the United States published a report (1) affirming that academic health centres have “enjoyed an important public trust as recipients of enormous amounts of public funding for biomedical research” (viii). One could extend this assertion to institutions of higher learning more broadly. As research institutes and universities continue to play a pivotal role in the biomedical revolution – and in the development of all areas of research – the pace of change continues to accelerate and opportunities to apply new knowledge and to manage knowledge more efficiently continue to grow. The report went on to acknowledge that these organizations will face new challenges, specifically that they will be called upon to demonstrate that not only are they efficient producers of new knowledge but that they can apply that knowledge effectively, partner with non-academic institutions, and accomplish their goals in ways that meet public expectations.

The scale, scope and dynamic nature of social and economic burdens also demand that these organizations excel in the sharing of research-based knowledge with all knowledge users; that is to say, with members of the public, with communities, with policy makers, in addition to scientists and academics. This shift, from a paradigm of the old adage, “knowledge is power” toward a new vision, “sharing knowledge is empowerment,” (2) will enable us to make more significant contributions to the well-being of mankind; it will accelerate our impact, and build stronger connections to society – all of which will strengthen the relevance of our universities and academic institutions for our time.

In thinking about scholarship, academic promotion, and the role of knowledge translation in these processes, it is useful to consider how universities have evolved in the last couple of centuries, and for this account I acknowledge the work of Cheryl Maurana (3) and Ernest Boyer (4). According to Boyer, scholarship in the United States higher education system has progressed through three discrete, yet overlapping stages. In the first stage, the 17th century colonial colleges focused on building the character of students and producing graduates who were prepared for civic and religious leadership. Teaching was considered a vocation like the ministry.

This was the dominant perspective until the mid-19th century, when it evolved to the second stage, characterized by universities’ growing focus on the practical needs of a growing nation. Universities saw themselves as having a direct role in supporting the nation’s business and economic prosperity, and in supporting the agricultural and mechanical revolutions. Education during this time was for ‘the common good.’ By the late 1800s, education was to be of practical utility and was about the application of knowledge to real problems.

The third stage of scholarly activity was characterized by an emphasis on basic research. Many scholars, having been educated in Europe, were intent on developing institutions focused on research and graduate education, modeled after the German research universities. Emphasis on teaching undergraduates or providing service decreased. The Second World War accelerated the focus on research as the academic priority and Federal dollars were directed to universities for their scientific endeavors. After the war, this government support continued and the focus on scientific progress was firmly rooted.

This evolution of purpose has effectively narrowed the criteria for evaluating faculty scholarship, which means it has shaped – and limited – what we do in these roles. Promotion and tenure depend on conducting research and publishing results. Equating research and publication with scholarship and promotion has resulted in some unintended and limiting consequences. Firstly, it has created a culture in which academia is disconnected from the real world problems of contemporary society and has failed to recognize the key role of non-academics in applying this research to address the growing number and complexity of social, economic, and environmental concerns. We’ve become detached from what’s going on out there, and we are only now re-engaging with communities and knowledge users in a way that necessitates a new paradigm for scholarship and, relatedly, a new way of assessing scholarly work.

In their report on Scholarship in Public (5), Julie Ellison and Timothy Eatman quote the President of the Association of American Colleges and Universities, Carol Schneider, describing her view of what’s wrong with the promotion system: “Picture a figure eight; a flattened figure eight, turned on its side. The left-side loop represents the academic field—with its own questions, debates, validation procedures, communication practices, and so on. The right-side loop represents scholarly work with the public—with community partners, in collaborative problem-solving groups, through projects that connect knowledge with choices and action. Our problem is that scholarly practice is organized to draw faculty members only into the left-side loop. The reward system, the incentive system, our communication practices—all are connected with the left side only. Work within the right-side loop is discouraged, sometimes quite vigorously. Our challenge, then, is to revamp the terrain so that the reward system supports the entire figure eight, and especially scholarly movement back and forth between the two loops in the larger figure. Left-loop work ought to be informed and enriched by work in the right-side loop, and vice versa. Travel back and forth should be both expected and rewarded” (page x).

A second limiting consequence is that we have developed tunnel vision regarding who we share our findings and discoveries with. The predominant methods for sharing what we learn are peer-reviewed publications and conferences, both of which are knowledge translation strategies uniquely targeted to academic audiences. We do this because we want to contribute to the building blocks of scientific and academic discovery, but it has the unintended consequence of building walls between us and everyone else out there who could benefit from our work or who could contribute to solutions. In the words of Jonathan Lomas, it is no longer sufficient to be the authors of cutting edge research because in its raw form, “research information is not usable knowledge” (6). To be truly poised to improve health and well-being requires that we manage our research knowledge base more efficiently – sharing our tacit knowledge within the organizations and sectors in which we work, as well as beyond our walls, with knowledge users who desire it and who are poised to act on it – with communities, service providers, educators, and government policy and decision-makers, and with the general public, who are oftentimes both the beneficiaries and benefactors of our research dollars.

A related consideration is that hoarding what we know presumes that only academics can solve the world’s problems. Rarely, however, are we in positions to implement our innovations and discoveries from where we sit, and so to ensure our work has an impact out there, we need to expand the audiences with whom we share our work and the partners with whom we conduct the work. New evidence from open innovation crowd-sourced solutions like Innocentive has shown us that difficult problems are solved more quickly when we can mobilize a large pool of expertise; it has also shown that solutions most often come from outside the discipline (7).

Whereas traditional innovation – writing papers, speaking at conferences, working in isolated cliques – occurs within the four walls of the enterprise and relies on internal experts, open innovation acknowledges that problem solvers and knowledge are widely dispersed and may reside outside the enterprise. We need to shift from traditional innovation which is often practiced by rigid cultures that focus too much on who solves problems, to embrace innovation in more open and collaborative cultures that focus on finding solutions to challenges. This means expanding how we define scholarship, how we document it, and consequently, how we behave as academics.

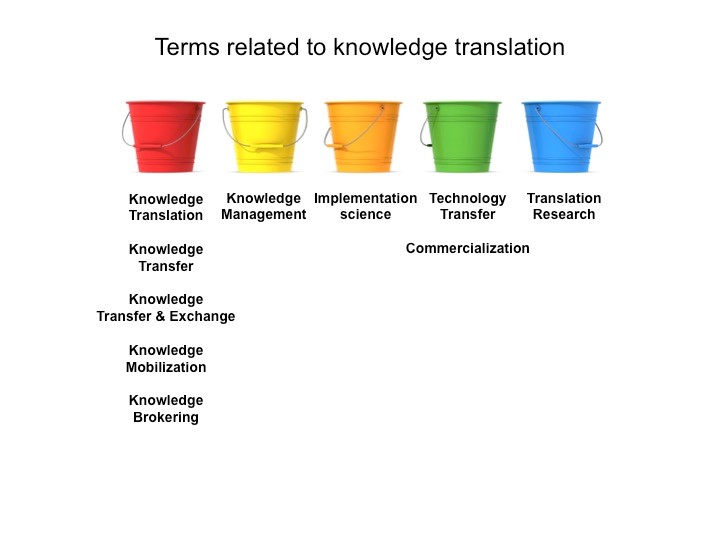

And it’s not just who we share with but how we share. Because writing a paper is not an effective way to get people to change what they do, we need to expand our knowledge translation competencies to include a range of strategies that can serve a variety of goals – everything from sharing knowledge, to building awareness, changing practice and behaviour, informing policy, and moving innovations to market for commercialization. These are all the purview of the modern day scholar. There is clear evidence that merely producing research and publishing in journals does little to change practice, and ultimately, does little to improve health and well-being. To have the impact we desire, science must ensure that research results translate to effective evidence-based practices in health care, in education, in social services. In the words of Jonathan Lomas, “excellence in research is laudable, but unless we can impact health and well-being, it presents an incomplete effort.” (8)

That a great many academics do little more than share with other academics using traditional pathways speaks to the fact that for the most part, we are only recognized for these efforts – that’s what counts as scholarship in academia. Traditional methods for translating research knowledge continue to form the basis for professional advancement through academic promotion. Consider this data I collected from scientists in the Child Health Evaluative Sciences program of our Research Institute in 2002 (9). Though a small sample, you can see where most of their knowledge translation activities lie.

We count indicators of scholarship such as number of grants, funding dollars, publications in prestigious journals, and presentations – such as this one – as well as excellence in teaching and mentorship and service to our institution. These indicators remain relevant, and to be sure, I am not suggesting that we dispense with them. What I am saying is that greater balance is required with a new range of metrics – a new paradigm of what counts as scholarship – because our traditional academic metrics have proved insufficient for achieving impact and have not kept pace with the evolving role of the academic, or with the demands of our changing world. More precisely, the number of grants you receive or the number of academic publications you accrue does little to demonstrate that the wide range of knowledge users for whom your work is relevant actually understood what you discovered or knew what to do with the knowledge you generated.

To borrow an expression from Chip and Dan Heath, counting grants and publications is TBU – true but useless (10), from an impact perspective. And therein lies the problem. Counting number of publications and grants is an easy way to capture our productivity relative to other academics – the left hand side of the figure eight. Documenting how our science has impacted real world problems and the well-being of others is less easy, but nonetheless entirely feasible, and we have a moral, ethical, and fiscal obligation to do so.

So how do we redefine scholarship? In 1990, Ernest Boyer was commissioned by the Carnegie Foundation for the Advancement of Teaching to examine the meaning of scholarship in the United States. Boyer assessed the roles of faculty in the US, and how these roles related to both the faculty reward system and the mission of higher education. And in this way, he created a new paradigm for scholarship that has been adopted by many institutions.

Boyer put forth four interrelated dimensions of scholarship:

- Discovery: the inquiry directed to the pursuit of new knowledge

- Integration: making connections among disciplines and adding new insights

- Application: asking how knowledge can be applied to the social issues of the times

- Teaching: not only transmitting knowledge but also transforming and extending it; what we would now conceptualize as teaching and knowledge translation

Building on Boyer’s work, Charles Glassick (11) was charged with determining the criteria used to evaluate scholarly work, from which emerged a set of standards for assessment:

- Clear goals

- Adequate preparation

- Appropriate methods

- Significant results

- Effective presentation

- Reflective critique

A similar perspective comes from Diamond and Adam (12):

- The activity requires a high level of expertise

- The activity breaks new ground or is innovative

- The activity can be replicated and elaborated

- The work and its results can be documented

- The work and its results can be peer reviewed

- The activity has significance or impact

These standards form the basis of the model of community engaged scholarship. “Community engaged scholarship is teaching, discovery, integration, application and engagement; clear goals, adequate preparation, appropriate methods, significant results, effective presentation, and reflective critique that is rigorous and peer-reviewed. It is reflected in scholarship that involves the faculty member in a mutually beneficial partnership with the community.”

Community-engaged scholarship overlaps with the traditional domains of research, teaching, and service, and is an approach to these three domains that is often integrative. As illustrated in this figure (13), approaches such as community-based participatory research (CBPR) and service-learning (SL) represent types of community-engaged scholarship that are consistent with the missions of research, teaching and service.

Engagement differs from dissemination or outreach. Engagement implies a partnership and a two-way exchange of information, ideas, and expertise, as well as shared decision making and collaboration in the efforts required for implementation and uptake.

How is this activity documented? Documentation is how a scholar presents evidence of her activities in a dossier; the evidence presented is about behaviours, activities, and qualities of that work.

For all of the reasons presented thus far, we have seen a shift in paradigm, mainly in North America, building on the work of Ernest Boyer and Charles Glassick. This shift has not been without its challenges. Science continues to be hierarchical, and as a social scientist situated in the health sciences research institute alongside basic scientists, I still encounter evidence of that hierarchy – but less so, and it is no longer openly sanctioned as politically correct in some academic circles, particularly as informed and visionary individuals take up leadership positions throughout the university.

There are also national challenges to sharing research. Consider a piece published in the Lancet Global Health in May of this year. In it, the author, Chris Simms, writes: “Canada’s reputation is further undercut by its silencing of government scientists on environmental and public health issues: scientists are required to receive approval before they speak with the media; they are prevented from publishing; and, remarkably, their activities are individually monitored at international conferences. These actions have outraged local and international scientific communities. A survey done in December, 2013, of 4000 Canadian federal government scientists showed that 90% felt they are not allowed to speak freely to the media about their work, and that, faced with a departmental decision that could harm public health, safety, or the environment, 86% felt they would encounter censure or retaliation for doing so” (14).

Take a moment to reflect on your own bias – both personal and organizational. What stands as scholarly and counts for promotion in your institution?

- Consider an investigator who studies pathways to care in early episode psychosis using qualitative methods, and publishes and presents her work to academic audiences (15).

- And if her presentations are made in schools, to audiences of teachers and students, is that scholarship?(15)

- And if she works with a choreographer to translate the main findings of her research to an original dance choreography and engages a troupe of dancers to communicate her research findings to students, is that scholarship? (15)

How many of you still have your hands up at this point in the story?

- What about a scientist in pediatric pain who develops an app that encourages children to recount their pain experiences? (16)

- What about a scientist who develops an animated vignette to share key research findings with practitioners; is that scholarship? (17)

Let’s look more closely at what this new paradigm of scholarship looks like. Different models have emerged from the work of Boyer and Glassick, variations on the standards proposed. In the interest of time, I will review how we have operationalized this new scholarship in the Department of Psychiatry and the Faculty of Medicine at the University of Toronto (18).

In our model, traditional concepts of teaching, research and service have been replaced with a new scholarship paradigm encompassing learning, discovery, and engagement. We still assess excellence in teaching and research. But our new paradigm also includes scholarly activities defined as Creative Professional Activity. Creative professional activity is included in scholarly activities to be considered in promotion decisions. The Faculty of Medicine at the University of Toronto recognizes CPA under the following three broad categories.

Professional Innovation and Creative Excellence, which includes the making or developing of an invention, development of new techniques, conceptual innovations, or educational programs inside or outside the University (e.g. continuing medical education or patient education). To demonstrate professional innovation, the candidate must show an instrumental role in the development, introduction and dissemination of an invention, a new technique, a conceptual innovation or an educational program. Creative excellence, in forms such as biomedical art, communications media, and video presentations, may be targeted at various audiences from the lay public to health care professionals.

Contributions to the Development of Professional Practices. In this category, demonstration of innovation and exemplary practice will be in the form of leadership in the profession, or in professional societies, associations, or organizations that has influenced standards or enhanced the effectiveness of the discipline. Membership or the holding of office in professional associations is not itself considered evidence of creative professional activity. Sustained leadership and setting of standards for the profession are the principal criteria to be evaluated. Both internal and external assessment should be sought. The candidate must demonstrate leadership in the profession, professional organizations, government or regulatory agencies that has influenced standards and/or enhanced the effectiveness of the discipline. Membership and holding office in itself is not considered evidence of CPA. Examples of contributions to the development of professional practice may include (but are not limited to) guideline development, health policy development, government policy, community development, international health and development, consensus conference statements, regulatory committees, and setting of standards.

Exemplary Professional Practice is that which is fit to be emulated; is illustrative to students and peers; establishes the professional as an exemplar or role-model for the profession; or shows the individual to be a professional whose behaviour, style, ethics, standards, and method of practice are such that students and peers should be exposed to them and encouraged to emulate them. To demonstrate exemplary professional practice, the candidate must show that his or her practice is recognized as exemplary by peers and has been emulated or otherwise had an impact on practice.

Community work becomes scholarship when it demonstrates current knowledge of the field, current findings, and invites peer review. The community work should be public, open to evaluation, and presented in a form others can build on.

So, what are the new metrics or criteria for assessing this type of scholarship? They include:

- Clear Goals, clearly stated and jointly defined by community and academics; that are developed in partnership and based on community needs; and reflect an issue that both community and academia think is important.

- Adequate Preparation; evidence that the scholar has the requisite knowledge and skills to conduct the assessment and implement the research, and has laid the groundwork for the program based on the most recent work in the field.

- Appropriate methods, developed with partner involvement, a feasible approach, and significant results.

- Effective presentation (knowledge translation), with documentation that the work of the partnership has been reviewed and disseminated in community and academic institutions; that presentations and publications have occurred at both community and academic levels; and that the results have been disseminated in a wide variety of formats appropriate for community and academic audiences.

Lastly, we need to see evidence of ongoing reflective critique – that the work has been evaluated; that the scholar thinks and reflects on her work; and we consider whether the community would work with this scholar again, and whether the scholar would engage the community again.

At the University of Toronto:

- CPA may be linked to Research to provide an overall assessment of scholarly activity.

- Contributions must be related to the candidate’s discipline and relevant to his/her appointment at the University of Toronto.

- There should be evidence of sustained and current activity.

- The focus should be on creativity, innovation, excellence and impact on the profession, not on the quantity of achievement.

- There must be evidence that the activity has changed policy-making, organizational decision-making, or clinical practice beyond the candidate’s own institution or practice setting, including when the target audience is the general public.

- Contributions will not be discounted because they have led to commercial gain, but there must be evidence of scholarship and impact on clinical practice.

Due to the variable activities included under CPA, there may be diverse, and sometimes innovative markers used to indicate the impact of the CPA. Evidence upon which CPA will be evaluated can include a wide range of activities; some of which you will recognize as more traditional ones but others that will seem novel to you.

- Scholarly publications: papers, books, chapters, monographs

- Non peer-reviewed and lay publications

- Invitations to scholarly meetings or workshops

- Invitations to lay meetings or talks/interviews with media and lay publications

- Invitations as a visiting professor or scholar

- Guidelines and consensus conference proceedings

- Development of health policies

- Presentations to regulatory bodies, governments, etc.

- Evaluation reports of scholarly programs

- Evidence of dissemination of educational innovation through adoption or incorporation either within or outside the university

- Evidence of leadership that has influenced standards and/or enhanced the effectiveness of health professional education

- Creation of media (e.g., websites, CDs)

- Roles in professional organizations (there must be documentation of the role as to whether the candidate is a leader or a participant)

- Contributions to editorial boards of peer-reviewed journals (including Editor-in-Chief, Associate Editor, and board member)

- Documentation from an external review

- Unsolicited letters

- Awards or recognition for CPA role by the profession or by groups outside of the profession

- Media reports documenting achievement or demonstrating the importance of the role played

- Grant and contract record, including evidence of impact on activity of industry clients

- Innovation and entrepreneurial activity, as evidenced by new products or new ventures launched or assisted, licensed patents

- Technology transfer

- Knowledge transfer

Elsewhere, other institutions refer to this type of activity as community engaged scholarship or community scholarship, to capture the products from active, systematic engagement of academics with communities for such purposes as addressing a community-identified need, studying community problems and issues, and engaging in the development of programs that improve outcomes.

The document referenced here (19; slide 25) provides edited or distilled information from the websites of several institutions and entities that have recognized and seek to reward community-engaged scholarship (CES). Most are health science schools or departments. Three are not: one represents an entire university, one a social science department, and the other a national body. All are good examples to explore.

Similar models are used elsewhere in North America; this is not a complete list (see slide 26).

There are very good resources describing these promotion practices, and illustrating how to document and assess them.

In their book on how to change things, Chip and Dan Heath (10) say that if you want things to change, you’ve got to appeal to both the rider – our rational side – and the elephant – our emotional side. The rider provides the planning and direction, and the elephant provides the energy and motivation.

Many organizations have paved the way and what remains is for you to apply this in your own institution – find the feeling, grow your people and systems, and rally the herd. My goal today was to point out a new destination for scholarship and academic promotion that fully considers a range of knowledge users and engaged strategies for sharing academic work. I have highlighted some of the ‘bright spots’ (10) – successful efforts that are worth emulating, and I have scripted some of the ‘critical moves’ (10) or elements of this new paradigm by sharing how some universities have defined and operationalized them.

Rally the herd at the university and research institutes

Here’s what you need to do to get to your new destination:

- Build on the work I’ve described and the new models shared to develop better methods to evaluate promotion and tenure practices that are inclusive of community scholarship in your own institution. Key to this process is the development of specific descriptions for faculty who are involved in this type of work, whether you call it creative professional activity as we do, community scholarship, or community engaged scholarship. Develop definitions that are meaningful in your context, and include standards of assessment, products, methods of documentation, and examples of faculty CVs. There are some examples available to get you started, as I’ve shown you, but they will likely need to be tailored to your own institutional context.

- Develop a national network of senior faculty in the field of community scholarship who can serve as mentors for other faculty and as models for junior faculty as they go forward for promotion.

- Cultivate and educate administrative leaders, senior faculty, and leaders of national associations and funding bodies to serve as champions for community scholarship and to advocate for policy change.

- Seek out and disseminate toolkits developed in support of community scholarship.

Grow your systems. Research funders are also important drivers for change, for if academics are going to expand their scholarly work, it needs to be funded. In Canada, the Canadian Institutes of Health Research and provincial funding bodies in Alberta and British Columbia have been important drivers for growth in KT and implementation science by way of tailored RFPs for science in this area that specifically request joint research leadership between nominated principal applicants and nominated principal knowledge users, and by allowances for KT activities in research budgets (not only for travel and open access publications).

Australasian funding bodies could broaden and deepen their appreciation of knowledge translation by moving beyond the clinical guideline, toward appreciating the multiple forms of partnership, engagement, and KT strategies that better exemplify the scope of activities designed to create real world impacts from the science they fund. These changes are low-hanging fruit; they are entirely feasible and critical to realizing real change in practice stemming from effective KT and partnerships.

Lastly, grow your people. The Hospital for Sick Children has two professional development opportunities that I’ve developed along with our KT team in the SickKids Learning Institute (20). Evaluations show they are highly regarded, valued, and effective for building knowledge and skills.

The Scientist Knowledge Translation Training course (SKTT) is run in Toronto and across Canada, and we’ve also taken it to the US and Scotland. It’s a 2-day training course intended for health science researchers across all four scientific pillars (basic, clinical, health services, population health) who have an interest in sharing research knowledge with audiences beyond the traditional academic community, as appropriate, and in increasing the impact potential of their research. The course is also appropriate for KT professionals, policy and decision-makers, educators, and researchers in other areas of science. I am running one this week here at the university, and we are looking to bring this course to Australia in partnership with KT Australia in 2015.

The Knowledge Translation Professional Certificate (KTPC) is a five-day professional development course and the only course of its kind in North America. The curriculum, presented as a composite of didactic and interactive teaching, exemplars, and exercises, focuses on the core competencies of KT work in Canada, as identified by a survey of KT Practitioners (21). This is a one-of-a-kind opportunity for professional development and networking, and is run in Toronto three times a year with a larger faculty. It too is highly subscribed and very well regarded, and it’s also very hard to get into, as it sells out in 15 minutes each time we open registration.

And so, in closing, I leave you with a new destination to contemplate but also with some concrete actions to get you started on your journey. Committing to these recommendations will require you to have courage to challenge the status quo, and be willing to look beyond traditional reward systems and take risks to redefine them. It will require crafting a shared vision, and the commitment to identify leading scholars who can serve as role models and mentors. And I remind you that in the end, the number of grants we get, the number of research studies we do or the proliferation of publications produced matters little if they do not improve practice, health, and well-being. What we really want to get at is not how much research we have done, but how many lives are improved as a result of what we have accomplished.

References

(1) The Commonwealth Fund Task Force on Academic Health Centers. (2003). Envisioning the future of academic health centers. Web document: www.cmwf.org.

(2) Rifkin SB & Pridmore P. (2001). Partners in planning: Information, participation, and empowerment. London UK: Macmillan Education Ltd.

(3) Maurana CA, Wolff M, Beck BJ & Simpson D. (2001). Working with our communities: moving from service to scholarship in the health professions. Education for Health, 14(2), 207-220.

(4) Boyer EL. (1996). Scholarship reconsidered: priorities of the professoriate. Princeton NJ: Carnegie Foundation for the Advancement of Teaching.

(5) Ellison J & Eatman TK. (2008). Scholarship in public: knowledge creation and tenure policy in the engaged university. Syracuse NY: Imagining America.

(6) Lomas J. (1990). Finding audiences, changing beliefs: the structure of research use in Canadian health policy. Journal of Health Politics, Policy and Law, 15, 525-542.

(7) Lakhani KR, Jeppesen LB, Lohse PA & Panetta JA. (2007). The value of openness in scientific problem solving. Harvard Business School Working Paper 07-050. From the web: http://www.hbs.edu/faculty/Publication%20Files/07-050.pdf

(8) Lomas J.

(9) Barwick M. (2002). Development of a knowledge translation strategy for population health sciences. Toronto, ON: The Hospital for Sick Children.

(10) Heath C & Heath D. (2010). Switch: how to change when change is hard. Toronto: Random House.

(11) Glassick CE, Huber MT & Maeroff GI. (1997). Scholarship assessed: Evaluation of the professoriate. The Carnegie Foundation for the Advancement of Teaching. San Francisco, CA: Jossey-Bass, Inc.

(12) Diamond R & Adam B. (1993). Recognizing faculty work: Reward systems for the year 2000. San Francisco, CA: Jossey-Bass.

(13) Commission on Community-Engaged Scholarship in the Health Professions. (2005). Linking scholarship and communities. Seattle WA: Community-Campus Partnerships for Health.

(14) Simms CD. (2014). A rising tide: the case against Canada as a world citizen. The Lancet Global Health, 2(5), e264-e265.

(15) Boydell K. (2011). Using performative art to communicate research: dancing experiences of psychosis. Canadian Theatre Review, 146.

(16) Dr. Jennifer Stinson, Hospital for Sick Children. http://www.campaignpage.ca/sickkidsapp/

(17) Dr. Melanie Barwick, The Hospital for Sick Children https://www.youtube.com/channel/UC21ckQoBd5tf4qO6Rv1WWjQ

(18) University of Toronto Department of Psychiatry Promotions. See: http://www.psychiatry.utoronto.ca/faculty-staff/faculty-promotions/senior-promotions/academic-pathways-creative-professional-activity/

(19) http://depts.washington.edu/ccph/pdf_files/Developing%20Criteria%20for%20Review%20of%20CES.pdf

(20) http://www.sickkids.ca/Learning/AbouttheInstitute/Programs/Knowledge-Translation/index.html

(21) Barwick M, Bovaird S & McMillan K. (under revision). Building capacity for knowledge translation practitioners in Canada. Evidence & Policy.

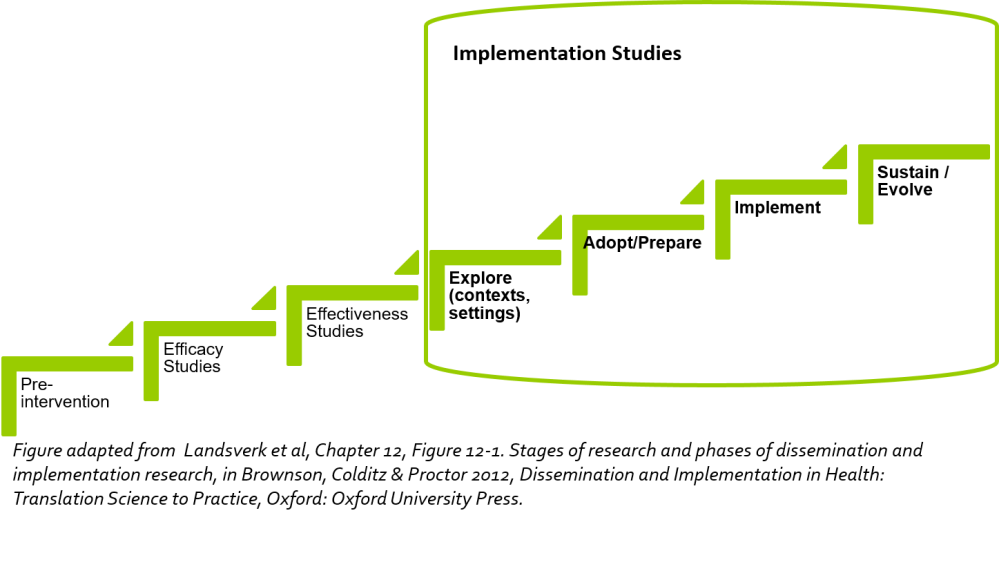

When we seek to change practice, behaviour, or policy, we enter the subspecialty of implementation science, defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services and care” (Eccles & Mittman, 2006). Note that the emphasis, in terms of purpose or KT goal, is on promoting “the systematic uptake”. This presumes that the research evidence we are sharing has instrumental use and is ethically ready for application and scale-up. Implementation science does not capture the entire spectrum of knowledge translation goals and activities; hence, the terms are related but not synonymous.

The context surrounding the knowledge translation communication involves what we reasonably know from the evidence, being mindful of its potential use (conceptual, symbolic, instrumental), ensuring that it is shared ethically, and being aware of how the knowledge user may access, understand, and benefit from it.

Implementation focuses on taking interventions that have been found to be effective using methodologically rigorous designs (e.g., randomized controlled trials, quasi-experimental designs, hybrid designs) under real-world conditions, and integrating them into practice settings (not only in the health sector) using deliberate strategies and processes (Powell et al., 2012; Proctor et al., 2009; Cabassa, 2016). Hybrid designs have emerged relatively recently to help us explore implementation effectiveness alongside intervention effectiveness to different degrees (Curran et al., 2012).

Fundamental Considerations for Evidence Implementation